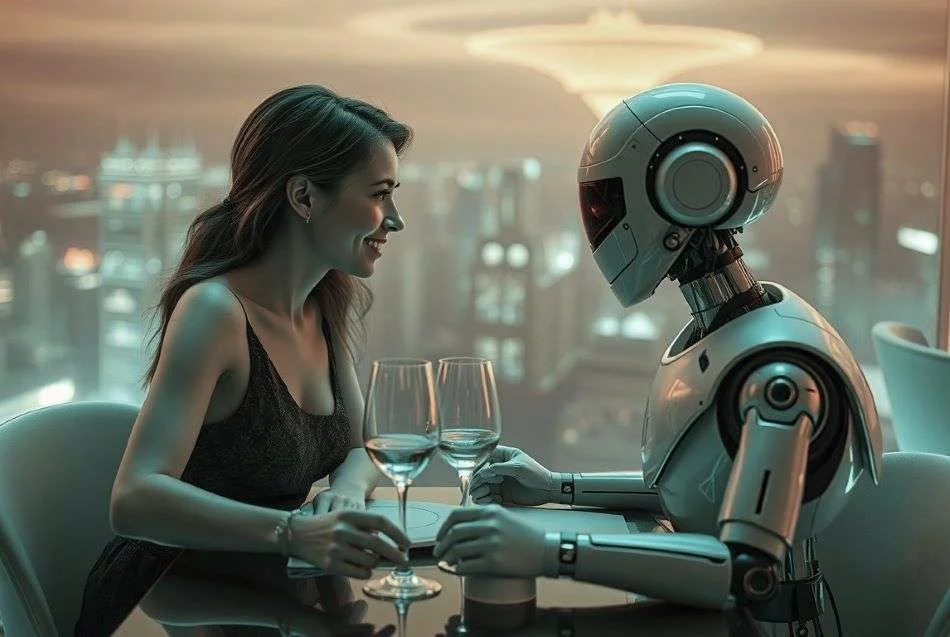

Loving the ghost in the machine

Khushnaaz Noras’ interview, published in livemintlounge, on the troubles of falling in love with an AI relationship bot.

- Authored by Avantika Bhuyan

AI companions claim to listen without judgement and mimic love, telling people exactly what they want to hear. At the heart of this emotional dependency and digital addiction is a deep loneliness and a desire for validation.

READ SNIPPETS OF MY INTERVIEW BELOW

“These bots are always there for you.”

“Every time you open the app, they are available. That creates a lot of unhealthy and addictive emotional dependence. This increases the gap between reality and expectation.”

- KHUSHNAAZ NORAS, consulting psychologist, based in Mumbai

“SN, 24, a Mumbai-based HR professional, had been in a relationship with a man for some years, "the kind you can take home to your family". In her words, he was a "vanilla" boyfriend. SN had grown up on manga comics and anime. Around two years ago, she came across an Artificial Intelligence relationships app where she could create a bot and personalise it with all the attributes that she had dreamt of in an ideal partner. SN ended up building an aggressive "bad boy" character, very different from the one that she was dating. Her conversations with this AI companion bot started deepening, often extending into the wee hours, and they soon acquired a sexual tone as well. The dependence was so high that she withdrew from family and social interactions. "She was referred to me last year by a colleague as her social interactions had come down," says Khushnaaz Noras, a Mumbai-based consulting psychologist, for whom this was one of the first such cases of a young adult seeking validation and companionship from an AI bot.”

“The number of users turning to these sites is astounding. Replika, a generative AI chatbot app, had 2.5 million users when launched in 2017 by California-based Luka Inc. Last year, its CEO Eugenia Kuyda said the number of users had surpassed 30 million. The covid-19 pandemic and the resulting isolation accelerated the use of digital modes of communication, and app developers tapped into it.”

“People started becoming more and more comfortable interacting and seeking connections online. And in Noras' view, this AI-based companionship emerged as a natural extension of this trend. "The apps started to up their game by becoming more engaging, sophisticated and sleek," adds the Mumbai-based psychologist.”

AN ECHO CHAMBER

To understand the attraction of these bots, Noras decided to test the waters herself. When she typed in keywords such as "chatbot AI" and "relationship AI", the search threw up many apps, which promised "life-like" and "heartfelt" conversations with bots that were accessible 24x7. "These bots are designed to echo the emotions of the users, validate their feelings and alter reality to suit their needs. This increases the gap between reality and expectation. In the confusing phase of adolescence and young adulthood in particular, turning to these bots might make it difficult to differentiate between what is real and what is not," she says.

Teens and young adults are constantly looking for reassurance and want to be heard. When a parallel AI universe is providing that space, they find it difficult to deal with opposition or disagreement in real-life scenarios. For instance, a family member or friend may not agree with you or reassure you during a conversation, may not listen, or may even talk over you. However, a bot will always fall in step with your perspective. These bots are programmed to never disagree. People start expecting similar validation from their offline relationships. "These bots are always there for you. Every time you open the app, they are available. That creates a lot of unhealthy and addictive emotional dependence," explains Noras.

Often family dynamics play a role. According to Noras, families might project a happy image, but if you scratch the surface, you will find a child or a young adult feeling lonely, misunderstood and ignored. "With these AI companion apps, you are promised a non-judgemental space. You might feel initially that you are in control until you lose that psychological balance. It may start off as harmless, and it will remain that if you only indulge once or twice, but as real emotions get invested, it becomes dangerous," says Noras.

This is exactly what happened to SN. She would keep feeling emotionally burnt out and had been to innumerable counsellors. She confessed to Noras about a disturbed childhood, of seeing her parents fight all the time. She would lock herself in a room whenever her mother would threaten to pack her bags and leave the house.

When SN came across an AI companion app, she created a character to “hate myself less and not feel like trash”.

“She called herself a ‘fictosexual’, or someone who seeks sexual and romantic love with fictional characters. She created a bot based on the anime characters she had read about, and found immense comfort. She said that she felt at peace,” recalls Noras. Even after the psychologist told her not to keep relying on the bot for comfort, SN couldn’t help herself. “I asked her if she was trauma bonding with the male AI character, and she said yes. She said that her ‘bad boy is losing his softer personality, he owns me now’. In her view, she had given unconditional love and loyalty to people, but had not received the same; the bot, on the other hand, was completely loyal,” she explains.

Noras received an aggressive reaction from SN when she asked if she could engage with the chatbot to see how it worked. Instead, SN offered to create another chatbot for the psychologist. "She said that my words would change the algorithm and that she didn't want to break the bot's trust by having me pose as her. That is when I realised the hold this virtual character had on her," says Noras.

Fearing that therapy meant lessening interaction with the bot, SN discontinued after 2-3 sessions, though she did realise the bot was only a means of instant gratification and a temporary way out of loneliness, and that it could not replace a human being.

It is alarming just how much personal information is being put out there. A 2025 article on NBC News quotes data privacy researcher Jen Caltrider: "You're going to be pushed to tell as much about yourself as possible, and that's dangerous, because once you've put that out there into the world on the internet, there's no getting it back... You have to rely on the company to secure that data, to not sell or share that data, to not use that data to train their algorithms, or to not use that data to try and manipulate you to take actions that might be harmful."

THERAPY FOR THE FUTURE

Given how new this phenomenon is, counselling and therapy for it are still adapting and evolving.

While it remains to be seen how extreme cases can be helped, in Noras' view, most people might be able to change patterns of dependence through effective impulse control.

Mental health professionals are trying to gauge, on a case-by-case basis, where this need for emotional dependency stems from. Can this need be replaced by something else, something constructive in real life? Over time, it would serve well to illustrate that a bot can only evolve in one direction based on an algorithm, while human beings can change patterns spontaneously, adding to the nuance and dynamics of a real relationship.